Image: Mark Hachman / IDG

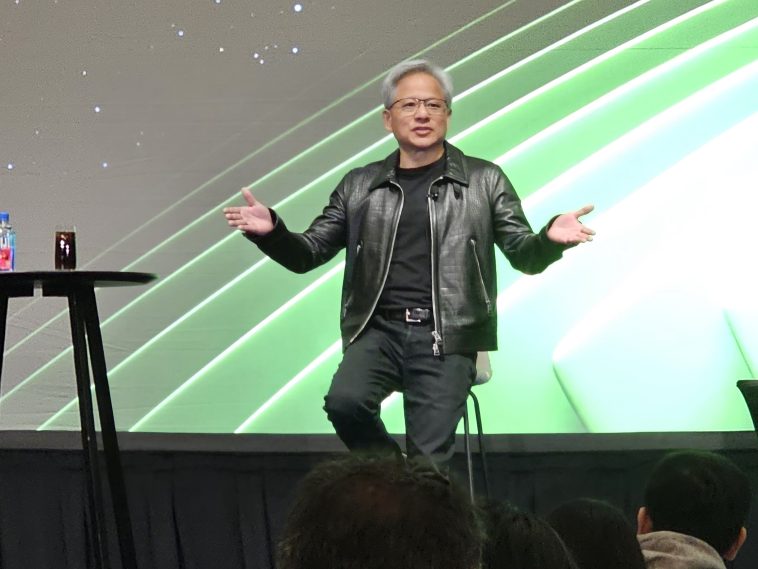

A day after launching the most hotly anticipated product in the PC world, the Nvidia GeForce 50-series family of graphics cards, Nvidia chief executive Jensen Huang appeared on stage at CES to answer reporters’ questions.

A key one: In a world where AI is increasingly used to generate or interpolate frames, will PC graphics eventually be entirely AI-generated? No, Huang replied.

There’s a reason we asked Huang the question. Nvidia says that while DLSS 3 could insert an AI-generated frame between each pair of GPU-rendered frames, DLSS 4 can infer three full frames from a single traditionally rendered frame, as Brad Chacos noted in our earlier report on the GeForce 50-series reveal.

A day earlier, rival AMD was essentially asked the same question. “Can I tell you that in the future, every pixel is going to be ML [machine learning]-generated? Yes, absolutely. It will be in the future,” AMD’s Frank Azor, its chief architect of gaming solutions, replied.

Huang disagreed. “No,” he said in response to the question, which was asked by PCWorld’s Adam Patrick Murray.

“The reason for that is because just remember when ChatGPT first came out, and we said, ‘Oh, now let’s just generate the book.’ But nobody currently expects that.

“And the reason for that is because you need to give it credit,” Huang continued. “You need to give it — it’s called condition. We now condition the chat or the prompts with context. Before you can answer a question, you have to understand the process. The context could be PDF, the context could be a web search. The context could be you told it exactly what the context is, right?

“And so the same thing goes with video games. You have to give a context. And the context for video games has to not only be story-wise relevant, but it has to be spatial, world, spatially relevant. And so the way you condition, the way you give it context, is you give it some early pieces of geometry, or early pieces of textures, and it could generate, it could up the rest.”

In ChatGPT and similar chatbots, that context is often supplied through a technique called retrieval-augmented generation (RAG), which guides the textual output. “In the future, 3D graphics would be 3D grounded condition generation,” he said.

In DLSS 4, the GPU’s rasterization engine renders only one of every four frames, Huang said. “And so out of four frames, 33 million pixels, we only rendered two [million]. Isn’t that a miracle?”

The key, Huang said, is that the rendered pixels have to be chosen precisely: “precisely the right ones, and from that conditioning we can generate the others.”
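Huang’s figures line up with a simple pixel count, though he did not spell out the resolutions involved. The sketch below assumes a 4K (3840 x 2160) output, one frame rendered natively at 1080p (1920 x 1080) and upscaled by DLSS, and three further frames generated entirely by AI. Those resolutions are our assumption for illustration, not numbers Nvidia gave on stage.

```python
# Rough pixel budget for one DLSS 4 cycle of four displayed frames.
# ASSUMPTIONS (not stated by Huang): 4K output, and the single traditionally
# rendered frame is drawn at 1080p before being upscaled to 4K.

OUTPUT_W, OUTPUT_H = 3840, 2160   # assumed 4K output resolution
RENDER_W, RENDER_H = 1920, 1080   # assumed internal render resolution
FRAMES_PER_CYCLE = 4              # 1 rendered frame + 3 AI-generated frames


def pixel_budget():
    """Return (pixels displayed, pixels rendered, rendered fraction)."""
    displayed = OUTPUT_W * OUTPUT_H * FRAMES_PER_CYCLE
    rendered = RENDER_W * RENDER_H  # only the one traditional frame is rendered
    return displayed, rendered, rendered / displayed


displayed, rendered, fraction = pixel_budget()
print(f"Displayed pixels:  {displayed:,}")
print(f"Rendered pixels:   {rendered:,}")
print(f"Rendered fraction: {fraction:.4f} (about 1 in {round(1 / fraction)})")
```

Under these assumptions, about 33.2 million pixels are displayed and roughly 2.1 million are rendered, or one pixel in 16, which matches the “33 million” and “two [million]” Huang cited.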

“The same thing is going to happen in video games in the future I just described will be, will happen to not just the pixels that we render, but the geometry that we render, the animation that we render, you know, and the hair we render in future video games.”

Huang apologized in case his explanation had been unclear, but concluded that there is, and always will be, a role for artists and rendering in video games. “But it took that long for everybody to now realize that generative AI is really the future, but you need to condition, you need to ground with the author, the artists, [and the] intention.”

Author: Mark Hachman, Senior Editor, PCWorld

Mark has written for PCWorld for the last decade, with 30 years of experience covering technology. He has authored over 3,500 articles for PCWorld alone, covering PC microprocessors, peripherals, and Microsoft Windows, among other topics. Mark has written for publications including PC Magazine, Byte, eWEEK, Popular Science and Electronic Buyers’ News, where he shared a Jesse H. Neal Award for breaking news. He recently handed over a collection of several dozen Thunderbolt docks and USB-C hubs because his office simply has no more room.
